Continuing the discussion from Now what?:

Hi @phpguru!

I took the liberty of turning this into a new topic for you.

Regarding the tricky movies you describe, we already have a system in place to deal with them, at least in part:


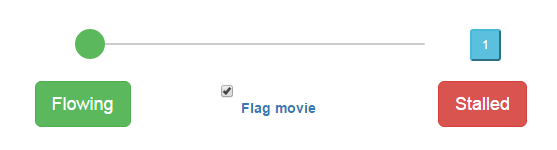

If at any point you believe that a movie is tricky to score, you can just use Flag movie, and the experts will check it:


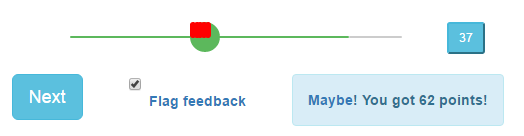

If you believe you can score the movie (cool that you are noticing subtleties like that in difficult movies, by the way!), but you see that the experts and/or the crowd disagree with you, you can use Flag feedback on the feedback screen itself!

Do not hesitate to do this if you think you're onto something important. That's what we're counting on you to do! The experts will take a look at the flagged movies.

As for differences in experience between individual users, this is what Sensitivity is meant to address. Sensitivity already determines your score: you get more points if you are "better" at catching stalls, although, as I'm sure you've noticed, it can fluctuate. In the future, we plan to take Sensitivity into account when weighting individual users' answers as well. This will let us give more "weight" to the answers of highly sensitive users, and also get confident answers from a smaller group of people, provided some of them are "close to experts" at annotating movies.
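To make the weighting idea concrete, here is a minimal sketch of a sensitivity-weighted vote on a single movie. The `Answer` record, the 0-to-1 sensitivity scale, and the rule "each vote counts in proportion to the voter's sensitivity" are all assumptions for illustration, not how Stall Catchers actually combines answers.

```python
# Hypothetical sketch: sensitivity-weighted vote on one movie.
# The record layout and weighting rule are illustrative assumptions,
# not the Stall Catchers / EyesOnALZ implementation.
from dataclasses import dataclass
from typing import List


@dataclass
class Answer:
    user: str
    says_stalled: bool
    sensitivity: float  # user's current Sensitivity on an assumed 0..1 scale


def weighted_stall_score(answers: List[Answer]) -> float:
    """Return the sensitivity-weighted share of 'stalled' votes (0..1)."""
    total = sum(a.sensitivity for a in answers)
    if total == 0:
        raise ValueError("no answers with nonzero weight")
    stalled = sum(a.sensitivity for a in answers if a.says_stalled)
    return stalled / total


answers = [
    Answer("alice", True, 0.9),
    Answer("bob", False, 0.4),
    Answer("carol", True, 0.7),
]
print(f"{weighted_stall_score(answers):.2f}")  # 0.80
```

With these made-up numbers, two fairly sensitive users outweigh one less sensitive dissenter. A real system would also need a threshold for calling a movie stalled and a rule for when enough weight has been collected to stop showing the movie.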

Please let us know if this is what you had in mind. We are open to ideas about improving these, of course.

Best,

Egle

Hi Egle, very interesting point 3! How many views do you think it will take per movie to get a good read? It looks like it could be up to about 10 for what we've been looking at currently, by the end of the contest.

Re weighting, does each person’s current sensitivity get stored along with their response? With the way the sensitivity gets dinged if someone happens to miss (or ahem, disagree with) a couple of calibrations in rapid succession, you may end up discounting some of your experts unnecessarily for the real clips they judge during one of those “sensitivity valleys.”

Or would you be using their average sensitivity over a window of time or something?

Dear LotteryDiscountz,

Thank you for the insightful questions! Please see below…

Best wishes,

Pietro

The research requirements for the Cornell studies involve very high data standards: Specificity and Sensitivity both need to be greater than 95%. That's even more challenging than having an accuracy level that high. We conducted a validation study at the beginning of this project, which revealed that we need between 15 and 20 annotations per vessel to achieve that quality level. So, today, we collect 20 annotations per vessel.
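Sensitivity is the share of truly stalled vessels that get labeled "stalled"; specificity is the share of truly flowing vessels that get labeled "flowing". The sketch below uses invented counts in which stalls are rare to show why requiring both to exceed 95% is stricter than requiring 95% accuracy: a rater who always answers "flowing" reaches 95% accuracy while catching no stalls at all. The function names are hypothetical.

```python
# Hypothetical helpers; the counts below are invented for illustration.

def sensitivity(tp: int, fn: int) -> float:
    """Share of truly stalled vessels labeled 'stalled'."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Share of truly flowing vessels labeled 'flowing'."""
    return tn / (tn + fp)


def accuracy(tp: int, fn: int, tn: int, fp: int) -> float:
    """Share of all vessels labeled correctly."""
    return (tp + tn) / (tp + fn + tn + fp)


def meets_standard(tp: int, fn: int, tn: int, fp: int, threshold: float = 0.95) -> bool:
    """True only if BOTH sensitivity and specificity exceed the threshold."""
    return sensitivity(tp, fn) > threshold and specificity(tn, fp) > threshold


# 1,000 vessels, 50 truly stalled; this rater always answers "flowing".
tp, fn, tn, fp = 0, 50, 950, 0
print(accuracy(tp, fn, tn, fp))        # 0.95
print(sensitivity(tp, fn))             # 0.0
print(specificity(tn, fp))             # 1.0
print(meets_standard(tp, fn, tn, fp))  # False
```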

Yes, exactly. We are currently working on a method to assign weight to a user's response based on their sensitivity, which will mean we can collect fewer annotations per vessel. This method will also adjust for individual response bias. Once it has been validated, we can even apply it retroactively. This is currently our highest research priority in EyesOnALZ.
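One standard way to weight answers that also corrects for response bias (not necessarily the method being developed for EyesOnALZ) is to combine answers as likelihood ratios, assuming raters answer independently of each other. Each answer shifts the log-odds of "stalled" by an amount that depends on that rater's sensitivity and specificity, so a rater who answers "stalled" to everything (sensitivity 1, specificity 0) carries no information. Per-user specificity, the tuple input format, the clamping constant, and the 50% prior are all assumptions here.

```python
# Hypothetical sketch: likelihood-ratio (naive Bayes) vote aggregation.
# Assumes raters are independent; not the EyesOnALZ method.
import math
from typing import Iterable, Tuple


def clamp(p: float, eps: float = 1e-3) -> float:
    """Keep probabilities away from 0 and 1 so the logs stay finite."""
    return min(max(p, eps), 1 - eps)


def posterior_stalled(answers: Iterable[Tuple[bool, float, float]], prior: float = 0.5) -> float:
    """answers: (says_stalled, sensitivity, specificity) per rater."""
    log_odds = math.log(prior / (1 - prior))
    for says_stalled, sens, spec in answers:
        sens, spec = clamp(sens), clamp(spec)
        if says_stalled:
            # P("stalled" | stall) / P("stalled" | flowing)
            log_odds += math.log(sens / (1 - spec))
        else:
            # P("flowing" | stall) / P("flowing" | flowing)
            log_odds += math.log((1 - sens) / spec)
    return 1 / (1 + math.exp(-log_odds))


two_good = [(True, 0.9, 0.9), (True, 0.9, 0.9)]
always_stalled = [(True, 1.0, 0.0)]
print(f"{posterior_stalled(two_good):.3f}")        # 0.988
print(f"{posterior_stalled(always_stalled):.3f}")  # 0.500
```

The biased rater leaves the 50% prior unchanged, while two independent raters with 90% sensitivity and specificity push the estimate close to certainty. Real raters are not fully independent, so a validated method would need to account for that.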

The sudden drop associated with getting dinged for a few incorrect (or disagreed-with) annotations is an unavoidable characteristic of our unique method, which ensures that all contributions are helpful and that web bots or malicious actors cannot adversely influence the data. Another key aspect of this method is that it adjusts rapidly to intraday fluctuations in a user's sensitivity. For example, many people naturally experience an afternoon "slump". We also have Alzheimer's patients participating, who have moments of higher or lower lucidity, and this ensures they can contribute beneficially whenever they play.

Also, as we continue to curate and build our library of calibration vessels, we hope to reduce disagreements and increase learning opportunities.

Sensitivity is indeed windowed, but the “blue tube” (label credited to @evelynrsmith) already reflects the windowed value. The window size was chosen to balance between statistical power and responsiveness.
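A sliding window is one simple way to compute a windowed sensitivity. In this sketch, sensitivity is the hit rate over the user's most recent calibration movies known to contain a stall; the class name and the window length of 10 are hypothetical, since the actual window size isn't stated here. It also reproduces the "sensitivity valley": three misses in a row pull the value from 1.0 down to 0.7.

```python
# Hypothetical sketch of a windowed sensitivity; window size is an assumption.
from collections import deque
from typing import Deque, Optional


class WindowedSensitivity:
    """Hit rate over the most recent stalled calibration movies."""

    def __init__(self, window: int = 10) -> None:
        # A deque with maxlen drops the oldest result once it is full.
        self.results: Deque[bool] = deque(maxlen=window)

    def record(self, caught: bool) -> None:
        """Log one calibration movie that truly contained a stall."""
        self.results.append(caught)

    def value(self) -> Optional[float]:
        """Current windowed sensitivity, or None before any calibrations."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)


w = WindowedSensitivity(window=10)
for _ in range(10):
    w.record(True)
print(w.value())  # 1.0
for _ in range(3):
    w.record(False)
print(w.value())  # 0.7
```

Shrinking `window` makes the value react faster to a slump but also makes it swing harder after a few misses; enlarging it smooths out the valleys at the cost of responsiveness, which is the trade-off described above. In this sketch, a miss keeps pulling the value down until enough newer results push it out of the window.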

I hope that’s helpful and thanks again for asking!

Very best,

Pietro

Thanks so much for the background, Pietro! I’m really glad to hear how even patients can contribute the way y’all have it set up.

Continuing the discussion from Random Presentation of Movies:

@MikeLandau wrote:

One of the things I get frustrated with is that sometimes I see spots that look like stalls, but they’re not, so I guess that must mean that they didn’t stay in one place long enough to be considered a stall. Is there a certain number of frames that a spot must be stationary before it is considered a stall?

The vessel imaging takes place over a very short period of time. While it may be possible for a stall to release during the movie, it would be extremely rare. Assume a stalled red blood cell (dark spot) will remain in place for the full time that the vessel is visible in the movie. Sometimes we see dark spots that appear to stick for a few frames; these are sometimes several red blood cells that each happen to stop at the same spot. Overall, however, we observe the red blood cells flowing through the entire vessel. So if you see several dark spots moving and only one appearing to stay stationary, it may just be an optical illusion. Make your judgement based on the flow of the entire vessel. If a stall exists, the entire segment (from one branch to the next branch) will not be flowing, and no dark spots will be moving in the targeted segment. You may see a single red blood cell stalled or several of them in a vessel, but you should never see one blood cell stalled and others flowing in the same vessel.

Hope this makes sense.

@gcalkins